Monday, October 19, 2015

Intrinsic rewards and grinding in video games

In one of my earliest blog posts, I talked about my model for intrinsic rewards in video games. The basic idea is that many games create a loop, such that when you follow the loop, the reward for an action is being able to do that action more, and in a more challenging way. Doing something in order to do that thing more is an intrinsic reward; doing something in order to get something else is an extrinsic reward. It seems simple, but it gets really messy when applied to different game genres, and my recent experience with Fallout Shelter made me realize this needs a deeper look. This blog post will talk through how intrinsic rewards play out in different games, and in particular how they relate to the issue of grinding and meaningful gameplay.

Friday, March 13, 2015

Bloom's taxonomy in NGSS (part 3/3)

This article is cross-posted on the BrainPOP Educator blog.

Inspired by Dan Meyer's analysis, I wrote two previous posts on the Common Core Math and Common Core ELA standards. Both broke down the standards on a task-by-task basis, and coded each task according to Bloom's taxonomy. In this part 3, we're going to turn to the NGSS and see how they stack up.

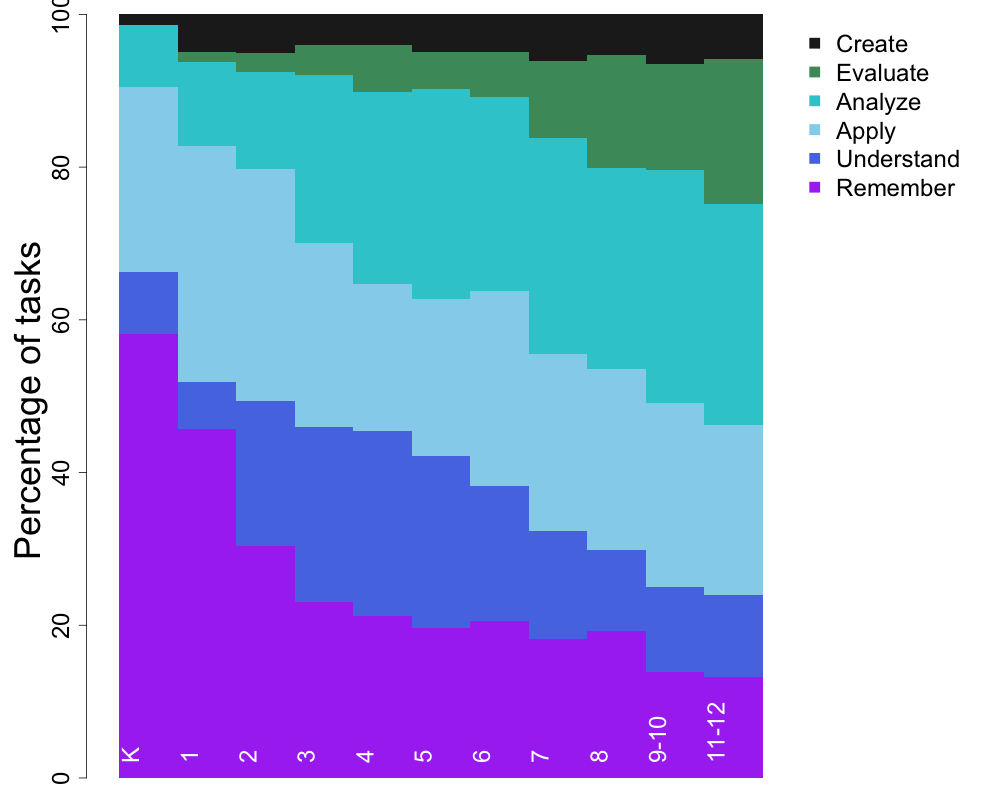

In addition to coding the specific NGSS grade bands (which are shown on the left of both graphs), I also decided to code the Framework itself (the right side of both graphs). The Framework was comprehensive enough that I could separately code the tasks in each of its three components: 1) the Science and Engineering Practices, 2) the Crosscutting Concepts, and 3) the Disciplinary Core Ideas, or DCIs (I did break up the DCIs by grade). Of course, the components are not expected to be taught in isolation even though I am coding them this way, but it is interesting to note the differences between them. The top graph shows the number of tasks, and the bottom shows the same info as percentages.

Next Generation Science Standards

First, general patterns. Unsurprisingly, the total number of tasks goes up with grade. Second, there is a slight trend towards higher-level skills at higher grades (though it's not nearly as strong as in the ELA standards). Third, the Framework's components look much as might be expected. The DCIs are unsurprisingly focused mostly on Remember tasks. The Practices, on the other hand, spread a fair proportion of tasks across the spectrum. The Crosscutting Concepts most emphasize Analyze tasks.

The most interesting patterns involve the gradebands, and their comparison to the Framework. The gradebands show a clear preference for higher-level skills at all grades. Create tasks start as early as kindergarten, and persist through high school. The main pattern across grades is that Remember tasks (primarily making observations) are strongest in kindergarten and fade in later grades. The most striking feature of the gradebands is that they most closely resemble the Science and Engineering Practices in their distribution of tasks. In other words, the Framework claims that the Practices are supposed to underlie all of the gradeband standards, and that seems to hold up surprisingly well. This is an especially interesting contrast with the CC Mathematical Practices, whose distribution of tasks bore little resemblance to the gradebands themselves. The traditional conception of science as a collection of facts shows up in the DCIs, but is not reflected in the gradebands.

The NGSS are certainly setting a high bar for what they expect from students in terms of science performance in school, and according to this analysis the bar sits at a higher level of Bloom's taxonomy than either Common Core standard.

That's it, part 3 of 3! Please, let me know your thoughts in the comments below.

Notes on Methodology

In case you are curious how I did this analysis, here are some details.

Some grade-specific standards contained multiple parts, or multiple tasks that they required from students. I coded each "task" required from students, which usually was one per standard, but sometimes was multiple tasks per standard.

For the gradebands, I coded the "performance expectations," or the things that followed "Students who demonstrate understanding can:"

For the Framework, I had to be a little more creative. For the Science and Engineering Practices, each practice has a section entitled "Goals" that starts with the phrase "By grade 12, students should be able to" and has a series of bullet points. I coded each of those bullet points. For the Crosscutting Concepts, I coded the short description of each concept on page 84 of the Framework. There was a section labelled "Progression" which described how the treatment of the concepts would change over time, but it was hard to code a clear number of tasks from that description. This led to a small number of total tasks, but a reliable relative percentage of tasks. For the Disciplinary Core Ideas, I coded the "Grade Band Endpoints," which were subdivided into 4 categories for different grade levels. This produced a somewhat artificially high number of tasks, as each sentence from a several-paragraph description was coded separately, but the relative proportions are reliable.

Each gradeband standard started with a verb that was relatively clearly related to some level of Bloom's, which allowed for easy, direct coding. The only sequence that I had to interpret a bit was "Developing a model to..." This most resembled a Create task, especially when the thing being modeled was sufficiently complex and there were multiple potential ways to model it. But when the model was relatively simple and defined (like the water cycle), this felt much more like an Analyze task: just finding and sorting all the obvious and finite pieces, rather than creating or synthesizing something new from a large and complex assemblage of parts. I used my best judgement to distinguish between Analyze and Create for that verb sequence.
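For readers who think in code, here is a minimal sketch of the verb-based coding described above. The verb table, the function name `code_task`, and the `model_is_complex` flag are all assumptions made up for illustration; they are not the actual lookup used in this analysis, and the Analyze/Create call for modeling tasks was a judgement, not a rule.

```python
# Hypothetical verb table: a few leading verbs mapped to Bloom's levels.
# The real coding covered far more verbs than this.
VERB_TO_LEVEL = {
    "observe": "Remember",
    "describe": "Understand",
    "use": "Apply",
    "analyze": "Analyze",
    "compare": "Analyze",
    "evaluate": "Evaluate",
    "design": "Create",
    "construct": "Create",
}

MODEL_PREFIXES = ("develop a model", "developing a model")


def code_task(expectation, model_is_complex=False):
    """Return a Bloom's level for one performance expectation.

    Returns None when the leading verb is not in the (made-up) table,
    which signals that a human needs to look at the task.
    """
    text = expectation.strip().lower()
    if text.startswith(MODEL_PREFIXES):
        # The one verb sequence that needed interpretation:
        # complex, open-ended models -> Create; simple, defined ones -> Analyze.
        return "Create" if model_is_complex else "Analyze"
    words = text.split()
    if not words:
        return None
    return VERB_TO_LEVEL.get(words[0])


print(code_task("Develop a model to describe the cycling of water"))
print(code_task("Develop a model to describe the cycling of matter", model_is_complex=True))
```

The `model_is_complex` flag stands in for the part a lookup table cannot do: someone still has to decide whether the thing being modeled is simple and defined or complex and open-ended.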

Wednesday, March 4, 2015

Bloom's taxonomy in Common Core ELA (part 2/3)

This article is cross-posted on the BrainPOP Educator blog.

In the last blog post, I was inspired by Dan Meyer's technique and broke the Common Core into discrete tasks, grouped by Bloom's taxonomy. In this part 2, I'm continuing the analysis and looking at the Common Core ELA. Part 3, including the NGSS, is up next!

Common Core ELA

So, what about the Common Core ELA standards? Once again the top figure shows the percentage of tasks in each level of Bloom's taxonomy, whereas the lower graph shows raw numbers of tasks.

The ELA standards tell a very different story from the Math standards. The number of tasks does increase with higher grade levels, but not as sharply as in the Math standards. On the other hand, there is a clear progression towards higher-level skills at higher grades. Analyze tasks become important from grade 3 onwards, and Evaluate tasks become important from grade 7 onwards. Understand tasks are important in grades 2-6, but then start to disappear from grade 6 onwards in favor of more Analyze and Evaluate tasks. In general, in higher grades simple comprehension is not enough: one is expected not only to comprehend but to compare texts and place them in larger contexts.

So why such a difference between Math and ELA? It might have to do with the different nature of the subjects. Math is a series of inter-related but discrete topics. Each grade introduces new topics, with new vocabulary and techniques that require scaffolding. ELA, on the other hand, is a relatively small set of skills (reading, writing, listening, speaking), all of which are practiced at all grade levels. What changes is how those skills are expected to be practiced.

UPDATE- You can also check out Part 3 of this series.

Notes on Methodology

In case you are curious how I did this analysis, here are some details.

Some grade-specific standards contained multiple parts, or multiple tasks that they required from students. I coded each "task" required from students, which usually was one per standard, but sometimes was multiple tasks per standard.

The standards themselves note that they overlap, in that the same content is emphasized and described in different ways in multiple standards. In my analysis, this gives those kinds of semi-repeated tasks more weight, as they are counted once for each of the various places they appear. I decided this was OK: things that were more crucial tended to be repeated more often, and therefore should get more weight.
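To make that weighting choice concrete, here is a small sketch comparing the two ways of counting. The rows are placeholder data invented for illustration (not real standards), and `deduplicated_counts` is the alternative I did not use.

```python
from collections import Counter

# Made-up (standard, task, level) rows; the identifiers are placeholders.
coded = [
    ("standard A", "compare two texts", "Analyze"),
    ("standard B", "compare two texts", "Analyze"),
    ("standard C", "recall key details", "Remember"),
]


def weighted_counts(rows):
    """Count every appearance, so a task repeated across standards counts each time."""
    return Counter(level for _, _, level in rows)


def deduplicated_counts(rows):
    """Alternative (not used in this analysis): count each distinct task once."""
    unique = {(task, level) for _, task, level in rows}
    return Counter(level for _, level in unique)


print(weighted_counts(coded))
print(deduplicated_counts(coded))
```

With the weighted approach the repeated task contributes twice to its level; with deduplication it contributes once, which would flatten the emphasis the standards themselves place on it.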

The ELA standards were often very repetitive across grades. Higher grades would often require the same task, but in a more rigorous way. Typically some kind of adjective or adverb would be added to the sentence to describe exactly how the rigor was supposed to be added. For my purposes, making the task more "rigorous" by adding an adjective or adverb qualifier did not change how I coded the task.

Monday, February 23, 2015

Bloom's taxonomy in the Common Core Math (part 1/3)

This article is cross-posted on the BrainPOP Educator blog.

Recently, Dan Meyer posted an analysis of what kinds of tasks are expected of students on the Khan Academy and how it compared to the Common Core. Dan used a recently released sample test from the SBAC to describe what the Common Core required, but we were inspired to take Dan's analysis one step further. Instead of interpreting SBAC's interpretation of the Common Core's interpretation of what students should do, we decided to go right to the core, the Common Core. What exact kind of tasks is the Common Core requiring of students? And how do those requirements change across grades?

But we needed a categorization system to group different kinds of student tasks. Dan used verbs to categorize tasks, but some verbs actually call for similar mental processes, and so probably should be grouped together rather than separated. Additionally, some verbs in the standards, like "understand," can have loaded meanings and, depending on their context, refer to higher or lower order cognitive tasks. To accommodate both of these issues, we grouped tasks using everyone's favorite categorization system, Bloom's taxonomy.

So, this led to a relatively large task: going through every standard in every grade and tagging it with one or more levels of Bloom's taxonomy. Our hope is that by doing this kind of work, we can better understand what kinds of tasks the Common Core is emphasizing. In this blog post, I'll look at the Common Core Math standards. Look for the CCELA and NGSS in followup posts!

Common Core Math

First up, Common Core Math! The results are shown in the two figures below. The top figure shows the percentage of tasks in each level of Bloom's taxonomy, whereas the lower graph shows raw numbers. The first column in the bottom graph codes the "Mathematical Practices," which are intended to span all standards, across all grades.
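The relationship between the two figures (raw counts in one, percentages in the other) is simple enough to sketch in a few lines. The grade labels and counts below are invented for illustration and are not the numbers behind the graphs; the function names `tally` and `to_percentages` are likewise my own.

```python
from collections import Counter

BLOOM_LEVELS = ["Remember", "Understand", "Apply", "Analyze", "Evaluate", "Create"]


def tally(coded_tasks):
    """Turn (grade, level) pairs into per-grade counts for each Bloom's level."""
    counts = {}
    for grade, level in coded_tasks:
        counts.setdefault(grade, Counter())[level] += 1
    return counts


def to_percentages(level_counts):
    """Convert one grade's counts into percentages of that grade's total."""
    total = sum(level_counts.values())
    if total == 0:
        return {level: 0.0 for level in BLOOM_LEVELS}
    return {level: 100 * level_counts[level] / total for level in BLOOM_LEVELS}


# Made-up sample data, NOT the real coding results.
sample = [
    ("K", "Remember"), ("K", "Understand"), ("K", "Understand"), ("K", "Apply"),
    ("1", "Apply"), ("1", "Apply"),
]

counts = tally(sample)
for grade, level_counts in counts.items():
    print(grade, dict(level_counts), to_percentages(level_counts))
```

Looking at both views matters because a grade with many more tasks can look dominant in the raw counts while having the same mix of levels as every other grade.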

The first two things to notice are that the number of tasks required of students increases with each grade level, and that the distribution of tasks does not change much across grade levels. There is a transition between grades 3-4, in which there are fewer Understand and more Apply tasks. But in general, there is very little trend across grades. Additionally, for most grades the highest three levels of the taxonomy comprise only 10-35% of the total tasks. The majority of the mathematics tasks students are being asked to do are at a relatively low level on Bloom's. To be fair, the higher-level tasks do take more time than low-level tasks, so the allotment of classroom time will be more weighted towards higher-level tasks than these standards indicate. But the majority of the standards text actually describes relatively low-level tasks.

Another qualification: the Mathematical Practices are much more weighted towards high-level tasks than the standards themselves, as can be seen in the top figure, and these practices are meant to be infused across the grade-level standards. Exactly how this infusing should occur is not clear, but it certainly would tip the scale towards higher-level tasks.

UPDATE- You can also check out Part 2 and Part 3 of this series.

Notes on Methodology

In case you are curious how I did this analysis, here are some details.

Some grade-specific standards contained multiple parts, or multiple tasks that they required from students. I coded each "task" required from students, which usually was one per standard, but sometimes was multiple tasks per standard.

The standards themselves note that they overlap, in that the same content is emphasized and described in different ways in multiple standards. In my analysis, this gives those kinds of semi-repeated tasks more weight, as they are counted once for each of the various places they appear. I decided this was OK: things that were more crucial tended to be repeated more often, and therefore should get more weight.

The high school Math standards were not broken up by grade; they were broken up by subject. I felt they confused the graph more than they added useful info, so I did not show them here. But I can say that the high school standards were similar to the middle school standards in mostly emphasizing lower order skills, in similar percentages.

To refer back to the original point of Dan's article: the sample test released by the SBAC seems to be testing at a much, much higher level than the 8th grade Common Core standards seem to be calling for, potentially indicating that they had selectively released their best test items, and that the sample test is not a representative example of the test as a whole. Also, the Khan Academy is actually doing quite well compared to the standards themselves! If anything, the Khan Academy tasks were at a higher average level of Bloom's taxonomy than the tasks described in the 8th grade Common Core Math standards.
